Symbolic Analysis, Google MusicLM & DetectGPT - CS News #4

Welcome to CS News #4!

The CS newsletter helps you keep track of the latest news in technology domains such as AI, security, software development and blockchain/P2P, and discover interesting new projects and techniques!

💡Project Highlight: angr, a symbolic analysis framework

angr is an open-source binary analysis platform written in Python. It can analyse a binary statically (i.e. without executing it) as well as dynamically (during actual execution), and it can be used to perform symbolic analysis of a program.

Symbolic analysis is a multi-step process for finding program inputs that satisfy certain conditions (e.g. an input that bypasses some of the program's logic checks). angr can be used to:

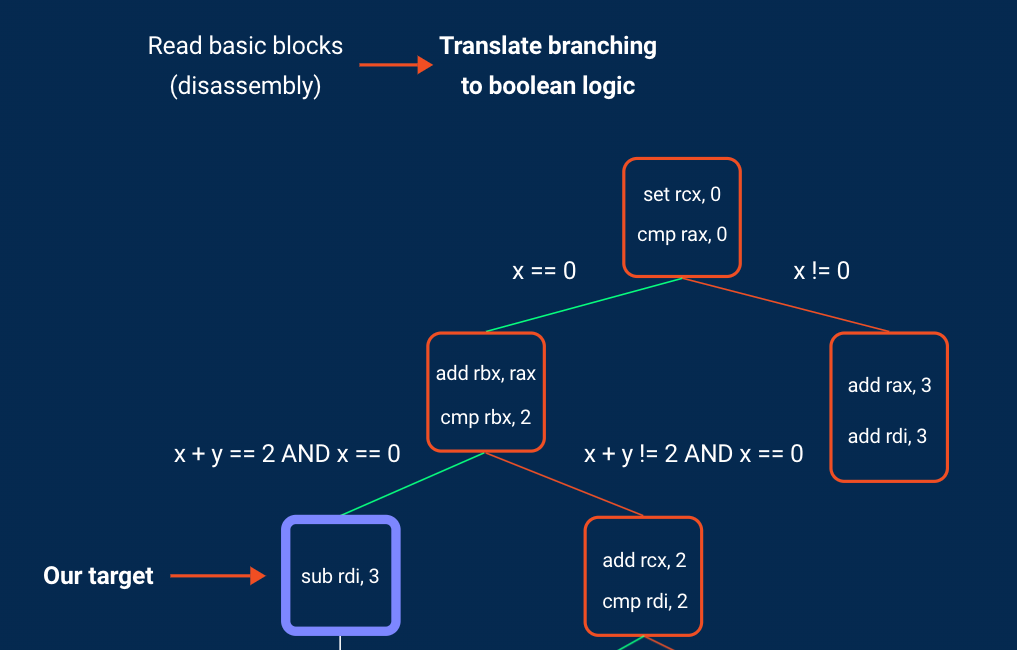

disassemble the binary to recover its basic blocks and conditional branches

represent each conditional branch as boolean logic

use z3, an SMT solver and theorem prover developed by Microsoft, to check whether those constraints are satisfiable for some input values

compute an input that satisfies the constraints along the path leading to the part of the program you want to execute
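To make these steps more concrete, here is a minimal pure-Python sketch. It is not how angr works internally: the `toy_program` function, the `PATH_CONSTRAINTS` list and the `solve` helper are all hypothetical names invented for this illustration, and an exhaustive search stands in for the z3 solver. It only shows the idea of turning the branch conditions on the way to a target into boolean constraints and then finding an input that satisfies all of them.

```python
from itertools import product
import string

# Hypothetical toy program: it only returns "Nice!" when all three checks pass.
def toy_program(password: str) -> str:
    if len(password) != 3:
        return "Wrong length"
    if ord(password[0]) + 1 != ord("b"):
        return "Incorrect"
    if ord(password[1]) ^ 0x20 != ord(password[0]):
        return "Incorrect"
    if ord(password[2]) - ord(password[1]) != 2:
        return "Incorrect"
    return "Nice!"

# Path constraints for the branch sequence that reaches "Nice!", written as
# boolean predicates over the (symbolic) input characters.
PATH_CONSTRAINTS = [
    lambda pw: ord(pw[0]) + 1 == ord("b"),
    lambda pw: ord(pw[1]) ^ 0x20 == ord(pw[0]),
    lambda pw: ord(pw[2]) - ord(pw[1]) == 2,
]

def solve(constraints, alphabet=string.ascii_letters, length=3):
    """Return the first candidate satisfying every constraint, or None.

    Exhaustive search stands in for the SMT solver here; it only works
    because the toy input space is tiny.
    """
    for chars in product(alphabet, repeat=length):
        candidate = "".join(chars)
        if all(check(candidate) for check in constraints):
            return candidate
    return None

solution = solve(PATH_CONSTRAINTS)
print(solution, "->", toy_program(solution))
```

A real solver reasons about the constraints instead of enumerating candidates, which is what makes the approach workable on inputs far larger than three characters.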

Here is an example of how angr can solve a DefCamp challenge, whose binary only prints “Nice!” when it is given a certain input, in only a few lines:

>>> import angr

# load the challenge binary

>>> project = angr.Project("defcamp_quals_2015_r100", auto_load_libs=False)

>>> simgr = project.factory.simgr()

# tell angr to find a state whose stdout (file descriptor 1) contains the "Nice!" string

>>> simgr.explore(find=lambda state: b'Nice!' in state.posix.dumps(1))

# print the stdin (file descriptor 0) of the first solution found; recent angr versions return it as bytes

>>> print(simgr.found[0].posix.dumps(0))

Code_Talkers

$ ./defcamp_quals_2015_r100

Enter the password: Code_Talkers

Nice!

🎶 MusicLM

Google Research introduced MusicLM, a model that generates high-fidelity music from text descriptions and outperforms previous systems in both audio quality and adherence to the text description. MusicLM can be conditioned on both text and a melody: it can transform whistled or hummed melodies according to the style described in a text caption.

bella ciao - generated a cappella chorus

bella ciao - generated electronic synth lead

Furthermore, Google Research publicly released MusicCaps, a dataset of 5.5k music-text pairs with rich text descriptions provided by human experts. However, as reported by TechCrunch, Google will not release access to the model because it fears copyright issues.

👉 Discover MusicLM

🧐 DetectGPT

ChatGPT has popularised Large Language Models (LLMs) and demonstrated their potential. Along with that come potentially harmful applications (bots, spam, phishing, etc.) and a question: is it possible to distinguish human-written text from auto-generated text?

To address this issue, researchers from Stanford University published DetectGPT, a new curvature-based criterion for judging whether a passage of text was generated by a given LLM. The method relies on the observation that text sampled from an LLM tends to occupy negative-curvature regions of the model's log-probability function. It therefore does not require training a separate classifier, collecting a dataset of real or generated passages, or explicitly watermarking generated text, and it outperforms existing zero-shot methods for model sample detection.
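The curvature idea can be sketched as a "perturbation discrepancy": compare the log probability of a passage with the average log probability of slightly perturbed versions of it. If the passage sits near a local maximum of the model's log probability, perturbations tend to lower the score and the discrepancy is clearly positive. The snippet below is only a toy illustration under loud assumptions: `TOY_UNIGRAM`, `toy_log_prob` and `toy_perturb` are hypothetical stand-ins invented here, whereas the real method scores text with the LLM under test and produces perturbations with a separate mask-filling model.

```python
import math
import random
import statistics

# Hypothetical toy "language model": a unigram table, not a real LLM.
TOY_UNIGRAM = {"the": 0.30, "cat": 0.15, "sat": 0.15, "on": 0.15, "mat": 0.15}
UNKNOWN_PROB = 0.01  # probability assigned to any word outside the table
REPLACEMENTS = ["the", "cat", "sat", "on", "mat", "zebra", "quantum", "slept"]

def toy_log_prob(text):
    """Log probability of the text under the toy unigram model."""
    return sum(math.log(TOY_UNIGRAM.get(w, UNKNOWN_PROB)) for w in text.split())

def toy_perturb(text, rng):
    """Replace one randomly chosen word (stand-in for mask-and-refill)."""
    words = text.split()
    words[rng.randrange(len(words))] = rng.choice(REPLACEMENTS)
    return " ".join(words)

def perturbation_discrepancy(text, log_prob, perturb, n=200, seed=0):
    """log p(text) minus the mean log p over n perturbed copies of text."""
    rng = random.Random(seed)
    perturbed_scores = [log_prob(perturb(text, rng)) for _ in range(n)]
    return log_prob(text) - statistics.mean(perturbed_scores)

machine_like = "the cat sat on the mat"         # high probability under the toy model
human_like = "quantum zebra slept on the mat"   # contains words the toy model finds unlikely

d_machine = perturbation_discrepancy(machine_like, toy_log_prob, toy_perturb)
d_human = perturbation_discrepancy(human_like, toy_log_prob, toy_perturb)
print("machine-like discrepancy:", d_machine)
print("human-like discrepancy:", d_human)
```

In this sketch a larger discrepancy suggests the passage was sampled from the scoring model; choosing a decision threshold is left out.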

Interesting stuff

Part 2 in a series of posts covering different topical areas of research at Google

Understanding Finetuning for Factual Knowledge Extraction from Language Models

PushWorld: A benchmark for manipulation planning with tools and movable obstacles

Apple is reportedly working on a way to make AR apps that’s as simple as talking to Siri